If you are a linguist or a translator, I am pretty sure you have wished for a terminology extraction tool at some point in your career. I remember thinking: if only I could build a glossary from this text, I could run a terminology check at the end of the project! But creating a glossary by hand was so time-consuming that it wasn’t worth the effort. Things have changed.

There is a before and an after the arrival of language models. Large-scale computing has also been crucial, as have open source projects. Put these three things together and we get a great cocktail: powerful tools available to everyone, including you and me. Yes, that’s right. Some basic programming skills are required, but you don’t need to write hundreds of lines of code to create efficient tools.

That is the case with a terminology extraction tool I have built in Python. My translation and localization life is much easier today thanks to it, so I would like to share the results of my research and the web app I developed. It relies on several Python libraries, such as spaCy, NLTK, Transformers, Pandas and Streamlit, to support the main library: KeyBERT.

If you want to go straight to testing the tool, you will find it in the Terminology Extraction section of my LocNLP23Lab. If you prefer to get started with the basics of KeyBERT, please keep reading.

KeyBERT is a tool that allows you to extract key terms from a given text using the power of BERT, which stands for Bidirectional Encoder Representations from Transformers. BERT is a Machine Learning (ML) model for natural language processing developed in 2018 by researchers at Google AI Language.
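
To give a rough idea of what happens under the hood: as far as I understand it, KeyBERT turns the document and each candidate term into a vector (an embedding) and ranks the candidates by how close they are to the document, using cosine similarity. The sketch below mimics that ranking with made-up three-dimensional vectors. The vectors and the helper names (`cosine_similarity`, `rank_candidates`) are assumptions for illustration only, not part of KeyBERT.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


# Made-up "embeddings": a real model produces vectors with hundreds of dimensions.
document_vector = [0.9, 0.1, 0.3]
candidate_vectors = {
    "renewable energy": [0.8, 0.2, 0.3],
    "fossil fuels": [0.4, 0.7, 0.2],
    "lion": [0.0, 0.1, 0.9],
}


def rank_candidates(doc_vec, candidates, top_n=2):
    """Score every candidate against the document and keep the best top_n."""
    scored = [
        (term, round(cosine_similarity(doc_vec, vec), 4))
        for term, vec in candidates.items()
    ]
    return sorted(scored, key=lambda pair: pair[1], reverse=True)[:top_n]


ranking = rank_candidates(document_vector, candidate_vectors)
print(ranking)
```

The result has the same shape as the KeyBERT output you will see later in this post: a list of (term, score) pairs, highest score first.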

In this tutorial, I will show you how to use KeyBERT to extract key terms from a piece of text. You can see below the link to the project. Thank you, Maarten Grootendorst, for creating such a useful tool!

Let’s see a quick and simple way to use this tool. Needless to say, you will need some basic Python knowledge to run it.

Prerequisites

Before we begin, you will need to install KeyBERT and its dependencies. KeyBERT is built on top of the BERT model, so you only need to install the Python package, which brings its dependencies with it, and then import the library. You can install it by running the following command:

pip install keybert

Extracting Key Terms

To extract key terms with KeyBERT, you will first need to import the KeyBERT class from the keybert package. Then, you can create an instance of the KeyBERT class and use its extract_keywords method to extract key terms from a piece of text.

Here is an example of how to use KeyBERT to extract key terms from a piece of text with just a few lines of code:

from keybert import KeyBERT

docs = ["""What is renewable energy?

Renewable energy is energy derived from natural sources that are replenished at a higher rate than they are consumed. Sunlight and wind, for example, are such sources that are constantly being replenished. Renewable energy sources are plentiful and all around us.

Fossil fuels - coal, oil and gas - on the other hand, are non-renewable resources that take hundreds of millions of years to form. Fossil fuels, when burned to produce energy, cause harmful greenhouse gas emissions, such as carbon dioxide.

Generating renewable energy creates far lower emissions than burning fossil fuels. Transitioning from fossil fuels, which currently account for the lion’s share of emissions, to renewable energy is key to addressing the climate crisis.

Renewables are now cheaper in most countries, and generate three times more jobs than fossil fuels.""",]

# Init KeyBERT

kw_model = KeyBERT()

kw_model.extract_keywords(docs[0], stop_words=None)


The output should be something like:

[('renewables', 0.6129),

('renewable', 0.6057),

('fuels', 0.4669),

('energy', 0.4032),

('coal', 0.3385)]

Nice, isn’t it? But that’s just a few individual words. We need phrases too. So let’s adjust some more things to get better results, like the n-grams.

Customizing the Key Term Extraction

You can customize the key term extraction process by passing additional parameters to the extract_keywords method. For example, you can specify the number of key terms to extract by setting the top_n=5 parameter. By adding keyphrase_ngram_range=(1, 2), you can also set the minimum and maximum number of words a term may contain.
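
To make the n-gram range less abstract, here is a minimal sketch of how candidate terms can be generated for a range of (1, 2). It is a simplified stand-in written with the standard library only. KeyBERT itself delegates this step to a scikit-learn vectorizer, and the helper name `ngram_candidates` and the shortened sample sentence are my own assumptions.

```python
import re


def ngram_candidates(text, ngram_range=(1, 2)):
    """Return every run of consecutive words whose length lies within ngram_range."""
    # Lowercase the text and keep only alphabetic words; punctuation is dropped.
    words = re.findall(r"[a-z]+", text.lower())
    min_n, max_n = ngram_range
    candidates = []
    for n in range(min_n, max_n + 1):
        for start in range(len(words) - n + 1):
            candidates.append(" ".join(words[start:start + n]))
    return candidates


# Shortened sample inspired by the renewable energy text above.
sample = "What is renewable energy? Renewable energy is plentiful."
candidates = ngram_candidates(sample, (1, 2))
print(candidates)
```

Because every run of consecutive words counts as a candidate, regardless of grammar or sentence boundaries, the list contains phrases such as "is renewable" and "energy renewable" alongside "renewable energy".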

Here is an example of how to customize the key term extraction process:

# Extract the top 5 key terms, each 1 to 2 words long

kw_model.extract_keywords(docs=docs, stop_words=None, keyphrase_ngram_range=(1, 2), top_n=5)

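This will output the top 5 key terms for each text in docs, although the results could still be improved. (The second block of results comes from a second text in docs, about supervised learning, which is not reproduced in the listing above.)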

[[('renewable energy', 0.7132),

('energy renewable', 0.6843),

('renewable resources', 0.6439),

('is renewable', 0.6307),

('renewables', 0.6129)],

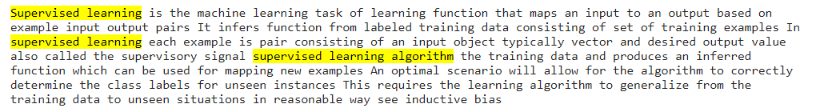

[('supervised learning', 0.6779),

('supervised', 0.6676),

('signal supervised', 0.6152),

('in supervised', 0.6124),

('labeled training', 0.6013)]]

# You can even highlight the key terms in the text by passing the parameter highlight=True.

keywords = kw_model.extract_keywords(docs[1], keyphrase_ngram_range=(1, 3), highlight=True)

As you can see, the results are still not perfect. This is because we have to set the n-gram range ourselves, and we can’t know in advance how many words each term will have. So why not let the machine choose for us?

Using Keyphrase-vectorizers

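In order to let the computer choose the best phrase length for the text, we will use Keyphrase-vectorizers (installable with pip install keyphrase-vectorizers). It’s simply great: instead of a fixed n-gram range, it tags each word with its part of speech and keeps the phrases that match a noun-phrase pattern, by default adjectives followed by nouns, so terms of any length can come out. Let’s see how to do it in a simple way.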

from keyphrase_vectorizers import KeyphraseCountVectorizer

kw_model.extract_keywords(docs=docs, vectorizer=KeyphraseCountVectorizer())

We have basically replaced the n-grams option with the vectorizer, and it seems to work, if you have a look at the output:

[[('renewable energy', 0.7132),

('renewable energy sources', 0.6512),

('renewables', 0.6129),

('fossil fuels', 0.5509),

('harmful greenhouse gas emissions', 0.4041)],

[('supervised learning algorithm', 0.6992),

('supervised learning', 0.6779),

('training data', 0.5271),

('training examples', 0.4668),

('class labels', 0.389)]]

Isn’t it magic? I love it! ❤
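
If you wonder why the vectorizer returns phrases of different lengths, the toy sketch below may help. It selects phrases by part-of-speech pattern (any adjectives followed by one or more nouns) rather than by a fixed word count. The tags are written by hand here, whereas the real library obtains them from a part-of-speech tagger; the helper name `noun_phrases` and the one-letter tags are assumptions for illustration.

```python
import re

# Hand-written (word, tag) pairs standing in for the output of a real tagger:
# J = adjective, N = noun, V = verb.
tagged = [
    ("fossil", "N"), ("fuels", "N"), ("cause", "V"), ("harmful", "J"),
    ("greenhouse", "N"), ("gas", "N"), ("emissions", "N"),
]


def noun_phrases(tagged_tokens, pattern=r"J*N+"):
    """Return the word sequences whose tags match the pattern."""
    # One character per token, so match positions map directly to token indexes.
    tags = "".join(tag for _, tag in tagged_tokens)
    phrases = []
    for match in re.finditer(pattern, tags):
        words = [word for word, _ in tagged_tokens[match.start():match.end()]]
        phrases.append(" ".join(words))
    return phrases


phrases = noun_phrases(tagged)
print(phrases)
```

The verb "cause" breaks the sequence, so a two-word term and a four-word term come out of the same sentence without anyone choosing an n-gram range.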

Last word

In this quick tutorial, I’ve shown you how to use KeyBERT to extract key terms from a piece of text. KeyBERT lets you extract key terms from any text quickly and easily, which makes it valuable for any NLP engineer and, why not, for any translator or linguist.
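
For translators, the natural next step is to turn the extracted terms into a glossary. Here is a minimal sketch, using only the standard library, that writes the (term, score) pairs to CSV with an empty column for the translation. The scores are copied from the vectorizer output above, while the function name `to_glossary_csv`, the column names and the 0.5 threshold are my own assumptions.

```python
import csv
import io

# Results copied from the renewable energy output shown earlier in this post.
keywords = [
    ("renewable energy", 0.7132),
    ("renewable energy sources", 0.6512),
    ("renewables", 0.6129),
    ("fossil fuels", 0.5509),
    ("harmful greenhouse gas emissions", 0.4041),
]


def to_glossary_csv(pairs, min_score=0.5):
    """Build CSV text with one row per term scoring at least min_score."""
    buffer = io.StringIO()
    writer = csv.writer(buffer, lineterminator="\n")
    writer.writerow(["term", "score", "translation"])
    for term, score in pairs:
        if score >= min_score:
            # The translation column is left empty for the translator to fill in.
            writer.writerow([term, score, ""])
    return buffer.getvalue()


glossary_csv = to_glossary_csv(keywords)
print(glossary_csv)
```

Saving the returned text to a .csv file gives you a starting glossary that opens in any spreadsheet or CAT tool that imports CSV.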

If you want to dig deeper into the tool, have a look at these articles:

- Keyword Extraction with BERT by Maarten Grootendorst

- How to Extract Relevant Keywords with KeyBERT by Ahmed Besbes

- Keyphrase Extraction with BERT Transformers and Noun Phrases by Tim Schopf
